[QUOTE="Netherscourge"]

[QUOTE="XBOunity"]

lol, muddy the waters and try hard. I think that's a stretch on your part. I do believe in that CPU being a bottleneck. They do exist, ya know.

XBOunity

The CPU is not a bottleneck on a PS4, since the PS4 runs games on a GPU shell and allows low-level API access, similar to what AMD is implementing in PC GPUs in their Mantle initiative: skipping right over OpenGL/DirectX and going straight into the GPU chipset itself.

If anything, the XB1's CPU will be a bottleneck since it's running on a Windows 8 Kernel and will require DirectX API access to the GPU for game processing.

I don't think many people have realized this yet. Microsoft has, which is why they tried to boost their Jaguar CPU's clock a little to compensate. And then there's all that Kinect stuff that's gotta be processed, eating up CPU cycles.

Bottom line: The XB1 is more dependent on its CPU than the PS4 is.

But hey, keep ignoring the facts and dig up more slides from 2012 if it makes you happy.

;)

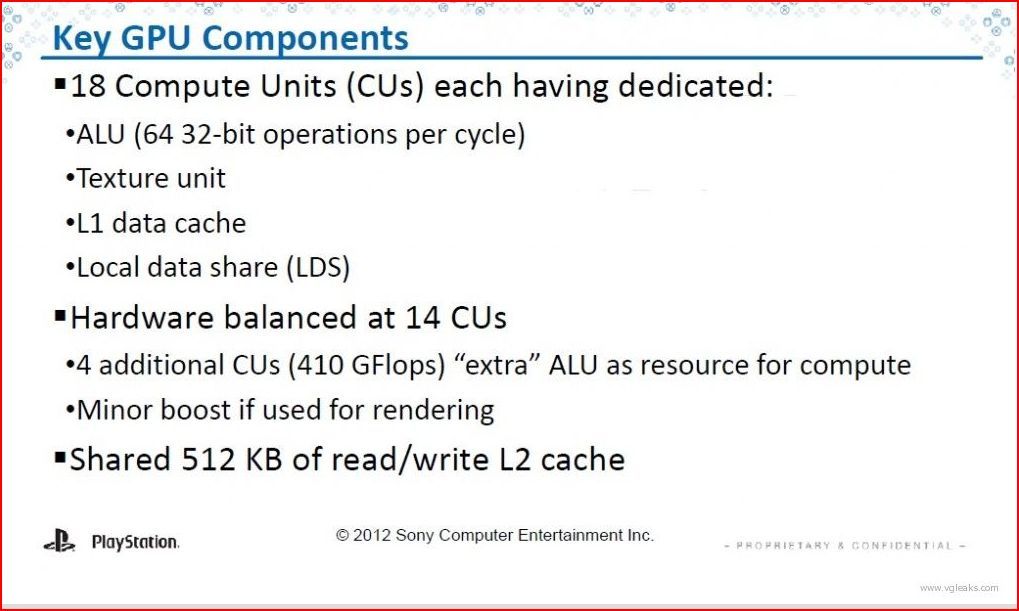

Oh, you have done testing on this? I would agree with you if the new VGLeaks info didn't say otherwise. Also, didn't the Killzone: Shadow Fall dev diary show only 14 CUs in use, as suggested by Digital Foundry? Or are they fanboys too? Just wondering.

I have no idea how many CUs any console is using. All I know is that the XB1 will be bottlenecked more by its CPU than the PS4 will because of how it's designed to use its Win8 OS and DX API.
